How Has SEO Changed in the Last Decade?

Has SEO Really Changed?

One reason I don’t write many blog posts, aside from updating our Risky Link Encyclopedia page, is that SEO fundamentally has not changed much in the last decade. To be clear, there have been some huge changes to the internet as a whole, but these changes have had only a limited impact from a Google search engine optimization perspective.

On the positive side, onsite SEO is still about making quality content and organizing it in a logical way for Google. Off-site SEO is still about acquiring links within certain organic (or organic-like) parameters. Functionally, it does not matter whether a link is paid for or earned as long as it looks the same. Links from strong pages, topic- and location-relevant sites, and sites with good TrustFlow (or other quality metrics) remain a priority for strong and safe link profiles.

On the negative side, the same risks that had to be avoided nearly a decade ago, around Google’s Penguin 3 update, still need to be avoided today. Generic spam links remain a risk even in low numbers and should be avoided or disavowed. Safe commercial anchor text has mostly settled into a norm of under 15%. Higher-quality link types, including blog post links, remain effective. Similarly, PBNs (private blog networks) remain effective as long as they don’t leave footprints. As for mid- to low-quality links such as web 2.0 user-created blogs (e.g. Blogspot), forum posts on relevant forums, and moderated blog comments on topic-relevant pages, these can still be effective but are generally weak and only safe in low numbers. As a result, both whitehat and even blackhat SEO have barely changed in a long time.
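As a rough illustration of the 15% norm, here is a minimal Python sketch that computes the share of commercial anchors in a list of backlink anchor texts. It rests on loud assumptions: what counts as “commercial” is your call, the hypothetical `COMMERCIAL_TERMS` set stands in for your own money keywords, substring matching is a crude heuristic, and the sample anchors are made up.

```python
# Hypothetical money keywords (an assumption); replace with the terms you actually target.
COMMERCIAL_TERMS = {"buy", "cheap", "best", "price", "seo services"}


def is_commercial(anchor: str, terms: set[str] = COMMERCIAL_TERMS) -> bool:
    """Crude heuristic: an anchor is commercial if it contains any of the given terms."""
    text = anchor.lower()
    return any(term in text for term in terms)


def commercial_share(anchors: list[str]) -> float:
    """Fraction of anchors classified as commercial (0.0 for an empty list)."""
    if not anchors:
        return 0.0
    return sum(is_commercial(a) for a in anchors) / len(anchors)


def within_norm(anchors: list[str], threshold: float = 0.15) -> bool:
    """True if the commercial share is under the threshold (15% by default)."""
    return commercial_share(anchors) < threshold


# Made-up sample: 2 of these 10 anchors contain a commercial term.
sample = [
    "Rewind SEO", "example.com", "click here", "buy cheap widgets", "this article",
    "homepage", "Daniel Delos", "best seo services", "here", "source",
]
print(commercial_share(sample))  # 0.2
print(within_norm(sample))       # False
```

In a real audit the anchors would come from a backlink export, and keyword matching alone will misclassify some branded or URL anchors, so the output is a starting point rather than a verdict.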

Search engine optimization generally has not changed much in the last decade, particularly compared to the massive changes of the early 2000s, when search engines themselves were new.

What Has Changed?

1. Social Media

The internet itself has changed and, in particular, has become increasingly centralized around social media. Has this meaningfully changed SEO? With respect to Google search engine optimization, social media is barely a factor, because Google intentionally ignores most social media and doesn’t even index many social media sites (Reddit being a big exception). The reason for this is that these sites are potential competitors to Google. As a result, Google-centered SEO has remained mostly unaffected by the social media revolution. Of course, social media marketing or social media optimization is its own thing entirely, but it isn’t related to Google.

2. SEO Automation – Spam/Blackhat

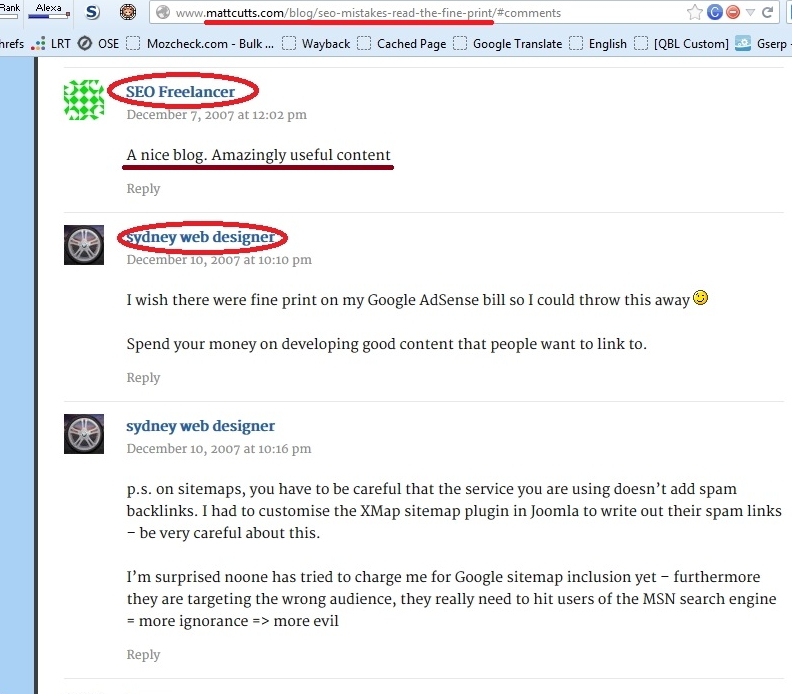

Another gradual change involves SEO automation. Widespread SEO automation has a long history, starting around 2010 with automated content posting systems like Xrumer (forum post and forum profile link spam), Scrapebox (blog comment and guestbook spam), and later GSA and other multi-platform spam posters, combined with CAPTCHA breakers to get around anti-spam measures. Autoblogs built on spun content (automatically rewritten text meant to pass as unique) were also common, though these mostly morphed into private blog networks. On the blackhat side, then, SEO automation has had huge effects, though in most cases they were short-lived, as Google’s algorithm updates penalized many of these overused link types and methods.

3. SEO Automation – Third Party Scraper and Statistics Sites

The other major way SEO has changed is through fully automated third-party content scraper and webstat sites, each of which may link out to hundreds of thousands or even millions of sites. In fact, today we often see the vast majority of links in a link profile come from fully automated third-party networks of websites. These sites aggregate large amounts of content or data from a large number of websites and generate their links and content automatically. There are also huge networks of image scraper sites that take images from other sites and link back to the source. Sometimes these scraper networks are built on subdomains, so in theory a single domain can host hundreds of different scraper subdomain sites. We’ve seen an epidemic of this recently. Obviously, Google doesn’t like automated content scraper sites, since they are (1) in most cases, link networks spanning multiple sites and (2) large amounts of duplicate content or else automatically spun gibberish (autoblogs).

Automated scraper networks have radically changed the landscape for link profiles, but it would be a huge mistake for Google to penalize sites based on these third-party link types. These sites and links have no association with the sites they link out to; they are simply automated systems. Nearly every site on the internet gets linked to by automated scraper links, webstat sites, and other automated link types. If you, dear reader, own a website, there is a nearly 100% chance that it is being linked to by large networks of automated content scraper and webstat sites, as well as a whole host of other automated linking.

Should you be worried about backlinks from scraper networks? A little, but not very much. The reason this type of SEO automation hasn’t fundamentally changed search engine optimization is that Google’s algorithm attempts to ignore these automated third-party link types. They aren’t supposed to be counted negatively (as a penalty) but simply ignored, as if they were disavowed. John Mueller and others from Google have stated many times that Google attempts to detect and ignore scraper sites and networks. Of course, attempting to ignore them and actually doing so correctly are two different things, and mistakes sometimes happen. We even had a client who received a (very short) manual penalty because the webspam team accidentally mistook automated image scraper links for intentional blackhat spam. In general, though, automated scraper networks have minimal effect on SEO. Ideally they should still be disavowed, just to make sure they are being ignored, but this isn’t too concerning, since most of them should already be ignored even without a disavow.
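For reference, Google’s disavow links tool in Search Console takes a plain-text file with one URL or `domain:` entry per line and `#` for comment lines. Below is a minimal Python sketch that turns a list of scraper or webstat URLs into that format. The hostnames are placeholders on the reserved `.test` TLD, the comment text is arbitrary, the helper names are hypothetical, and deciding which linking sites are actually automated scrapers (the hard part) is assumed to have been done already.

```python
from urllib.parse import urlparse


def hostname_of(entry: str) -> str:
    """Return the lowercase hostname from a full URL or a bare 'host/path' entry."""
    entry = entry.strip()
    if "://" in entry:
        return (urlparse(entry).hostname or "").lower()
    return entry.split("/")[0].lower()


def build_disavow(entries: list[str], note: str = "Automated scraper/webstat sites") -> str:
    """Build disavow-file text: one '#' comment line, then a sorted 'domain:' line per unique host."""
    hosts = sorted({h for h in (hostname_of(e) for e in entries) if h})
    lines = [f"# {note}"] + [f"domain:{h}" for h in hosts]
    return "\n".join(lines) + "\n"


# Placeholder linking URLs, as they might appear in a backlink export.
found = [
    "https://stats.example-webstat.test/site/yourdomain",
    "http://Images.Example-Scraper.test/img/123",
    "stats.example-webstat.test/other-page",
]
print(build_disavow(found), end="")
# # Automated scraper/webstat sites
# domain:images.example-scraper.test
# domain:stats.example-webstat.test
```

This sketch disavows at the exact hostname found in the link; whether to list individual URLs, subdomains, or a parent domain for a given scraper network is a judgment call left to the auditor.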

In Conclusion

The internet has changed significantly in the last decade with the explosion of social media, rapidly increasing semi-automation (spammer links), and fully automated content systems (scraper sites). But for the most part, these changes have had little impact on how SEO actually works in relation to Google.

About Daniel Delos

Daniel is the founder of Rewind SEO and has worked on hundreds of Google penalty analyses and recovery projects, recovering both manual and algorithm penalties. He has almost two decades of total SEO experience and has worked almost exclusively on risk auditing and penalty prevention/recovery since 2014.
